In the 2021 automated vehicle survey by AAA, the US drivers’ organization, 54% of Americans said they’d be afraid to ride in an AV, while 32% were unsure how they’d feel about it.

Before convincing the public to trust them, AVs will have to demonstrate clearly that they can mimic and surpass human drivers’ capabilities.

Pilot programs like the one California just launched with Cruise to provide driverless rides to Golden State residents will play an important role in fostering more public support for AVs. But nothing will be more effective than advances in AV technology.

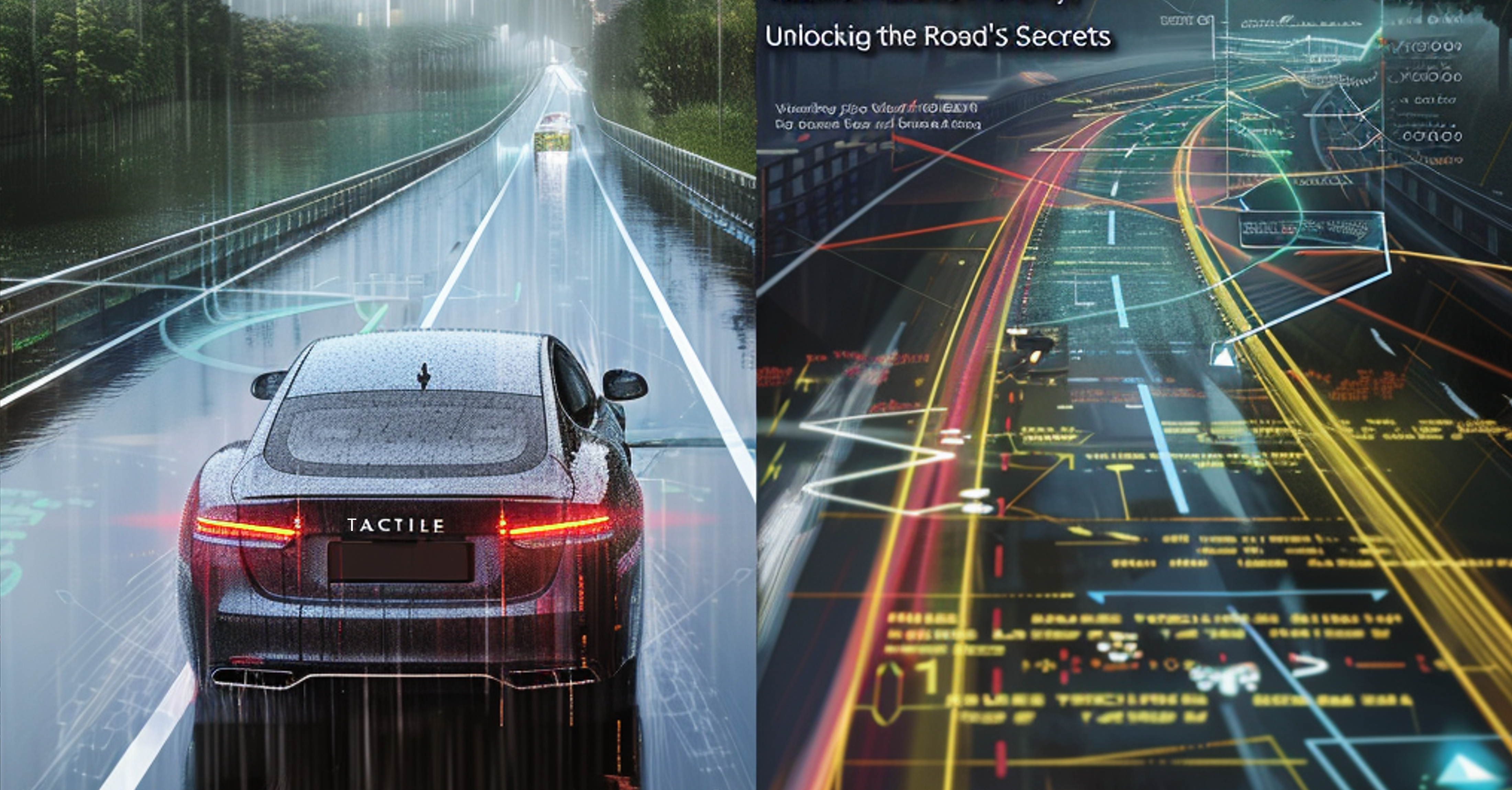

That’s what makes tactility the missing piece of the AV puzzle.

Just as human drivers can “feel” the road under their vehicles’ tires without much thought – using this perception to avoid hazards and drive more safely – AVs need to go beyond vision-based capabilities and “feel” the roads themselves.

Equipped with software-based tactile sensors that utilize information from existing physical sensors, vehicles will be able to collect insights on road conditions, including slipperiness, puddles, pavement surface quality, distresses, and more.

What could this mean for how AVs navigate roads?

Consider an AV moving through a torrential downpour. Tactile sensors could detect the impact on road friction and, harnessing machine learning algorithms, help determine the maximum speed the vehicle should drive at to avoid aquaplaning.
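To make the link between measured friction and a speed limit concrete, here is a minimal sketch in Python. It is not the machine learning approach mentioned above and it does not model aquaplaning itself. It only uses the standard stopping-distance relation to show how a lower friction estimate translates into a lower safe speed. The function names, the reaction time, and the friction values are illustrative assumptions.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance_m(speed_ms: float, mu: float, reaction_time_s: float = 0.5) -> float:
    """Distance covered while reacting plus distance needed to brake to a stop."""
    return speed_ms * reaction_time_s + speed_ms ** 2 / (2 * mu * G)


def max_safe_speed_ms(mu: float, clear_distance_m: float, reaction_time_s: float = 0.5) -> float:
    """Highest speed at which the vehicle can still stop within clear_distance_m.

    Solves v*t + v^2 / (2*mu*g) = d for v (positive root of the quadratic).
    mu is a hypothetical friction estimate from a tactile sensor.
    """
    a = mu * G  # maximum braking deceleration available on this surface
    return -a * reaction_time_s + math.sqrt((a * reaction_time_s) ** 2 + 2 * a * clear_distance_m)


if __name__ == "__main__":
    # Assumed example values: 0.8 for dry asphalt, 0.3 for a very wet road.
    for label, mu in (("dry", 0.8), ("wet", 0.3)):
        v = max_safe_speed_ms(mu, clear_distance_m=60.0)
        print(f"{label}: {v * 3.6:.0f} km/h")
```

A production system would combine an estimate like this with water depth, tire condition, and learned models. The point here is only that a friction reading can be turned directly into a driving decision.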

The data collected by tactile sensors – on potholes and other road distresses, for example – can be used to help authorities optimize road infrastructure maintenance.

Those insights, which can be visualized in the form of crowdsourced tactile maps, detail road features like gradients, corners, normalized grip levels, and the location of potholes and other damage.

The maps offer a real-time view of driving environments and support road authorities’ and councils’ planned maintenance, live hazard detection, post-accident analysis and more.

And they transmit the insights back to vehicles, providing them with data on the road ahead and improving safety, user experience, and reaction time.
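As a rough illustration of how crowdsourced reports could be combined into such a map, the sketch below averages normalized grip readings per road segment and flags the slippery ones. The data layout, the segment identifiers, the function names, and the 0.4 threshold are all hypothetical, chosen only for this example.

```python
from collections import defaultdict
from statistics import mean


def build_grip_map(reports):
    """Average normalized grip (0 = no grip, 1 = full grip) per road segment.

    reports is an iterable of (segment_id, grip) pairs sent in by vehicles.
    """
    by_segment = defaultdict(list)
    for segment_id, grip in reports:
        by_segment[segment_id].append(grip)
    return {segment_id: mean(values) for segment_id, values in by_segment.items()}


def low_grip_segments(grip_map, threshold=0.4):
    """Segments whose average grip falls below the (assumed) warning threshold."""
    return sorted(seg for seg, grip in grip_map.items() if grip < threshold)


if __name__ == "__main__":
    # Made-up readings from three vehicles on two segments.
    reports = [("segment-A", 0.8), ("segment-A", 0.6), ("segment-B", 0.3)]
    grip_map = build_grip_map(reports)
    print(low_grip_segments(grip_map))
```

The same aggregated values could serve both audiences described above: road authorities looking for segments that need attention, and vehicles asking what lies on the road ahead.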

Tactile data on vehicle-road dynamics can also be fed into AI-based systems that support vehicle control and advanced driver-assistance systems (ADAS), enabling better driving decisions.

Adaptive cruise control, drivetrain management, and active suspension systems can be enhanced with real-time tactile sensing and data – making vehicles not only safer but also higher-performing.

By delivering technical sophistication and peace of mind to consumers, tactile capabilities will prove critical to getting self-driving cars operational.

VICE PRESIDENT OF PRODUCTS